ChatGPT's rather underwhelming performance on a formal problem

The MIU system was introduced by Douglas R. Hofstadter in his fabulous book Goedel, Escher, Bach. It is a formal system that acts on chains (words) assembled from a three-letter alphabet (the letters M, I and U) according to four simple rules.

1. If your chain ends with „I“, you may append a „U“. Example: MI - (1) - MIU

2. If your chain looks like „Mx“ (it starts with „M“, followed by an arbitrary sequence of letters x), you may write „Mxx“.

3. You can replace „III“ with „U“.

4. You can delete the sequence „UU“.
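To make the four rules concrete, here is a minimal Python sketch that computes every chain reachable from a given chain in exactly one step. The function name `successors` is my own choice for illustration and does not come from Hofstadter's book.

```python
def successors(word: str) -> set[str]:
    """Return every chain reachable from `word` by applying one rule once."""
    result = set()
    # Rule 1: a chain ending in "I" may get a "U" appended.
    if word.endswith("I"):
        result.add(word + "U")
    # Rule 2: "Mx" may become "Mxx" (x is everything after the leading "M").
    if word.startswith("M"):
        result.add(word + word[1:])
    # Rule 3: any occurrence of "III" may be replaced by "U".
    for i in range(len(word) - 2):
        if word[i:i + 3] == "III":
            result.add(word[:i] + "U" + word[i + 3:])
    # Rule 4: any occurrence of "UU" may be deleted.
    for i in range(len(word) - 1):
        if word[i:i + 2] == "UU":
            result.add(word[:i] + word[i + 2:])
    return result


print(sorted(successors("MI")))     # ['MII', 'MIU']
print(sorted(successors("MIIIU")))  # ['MIIIUIIIU', 'MUU']
```

Rules 3 and 4 can match at several positions, which is why the function returns a set of chains rather than a single result.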

Let me give you just a few examples. The number in parentheses is the rule being applied; (2x3) means that rule 3 is applied twice:

- MIIIU - (3) - MUU - (4) - M. So there is a series of operations that transforms „MIIIU“ into „M“.

- MIU - (2) - MIUIU

- MUIIIU - (2) - MUIIIUUIIIU - (4) - MUIIIIIIU - (2x3) - MUUUU - (2x4) - M
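The example derivations above can be checked mechanically. The following is a small sketch, not a definitive implementation: `is_step` and `check` are names I made up, and a derivation is written as a list of (rule number, resulting chain) pairs. The shorthand steps (2x3) and (2x4) from the last example are spelled out as single rule applications.

```python
def is_step(before: str, after: str, rule: int) -> bool:
    """True if `after` results from applying rule `rule` once to `before`."""
    if rule == 1:
        return before.endswith("I") and after == before + "U"
    if rule == 2:
        return before.startswith("M") and after == before + before[1:]
    if rule not in (3, 4):
        raise ValueError(f"unknown rule: {rule}")
    # Rules 3 and 4 rewrite a pattern at any position in the chain.
    pattern, replacement = {3: ("III", "U"), 4: ("UU", "")}[rule]
    return any(
        before[i:i + len(pattern)] == pattern
        and after == before[:i] + replacement + before[i + len(pattern):]
        for i in range(len(before))
    )


def check(start: str, steps: list[tuple[int, str]]) -> bool:
    """True if every (rule, result) pair is a legal step from the previous chain."""
    word = start
    for rule, result in steps:
        if not is_step(word, result, rule):
            return False
        word = result
    return True


print(check("MIIIU", [(3, "MUU"), (4, "M")]))  # True
print(check("MIU", [(2, "MIUIU")]))            # True
print(check("MUIIIU", [(2, "MUIIIUUIIIU"), (4, "MUIIIIIIU"), (3, "MUUIIIU"),
                       (3, "MUUUU"), (4, "MUU"), (4, "M")]))  # True
print(check("MIU", [(1, "MIUU")]))  # False: rule 1 needs a trailing "I"
```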

(Hofstadter asks whether it is possible to transform „MI“ into „MU“. Try it! You will not find a spoiler in this post.)

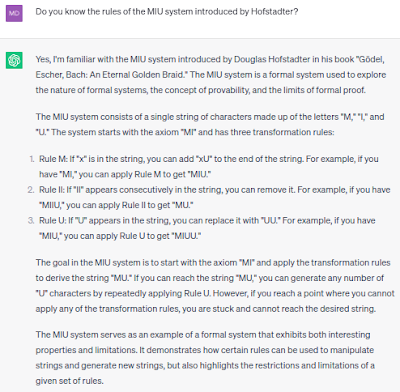

I hope you agree that this sounds like a quite simple system made up of pure logic, something a computer should be good at. So let’s ask our friendly ChatGPT whether it knows the rules.

The rules

I will not present too many prompts and answers; that would be boring, and you can just try it yourself. I will only provide as much as is necessary to make my point (see Conclusion, below).

The question

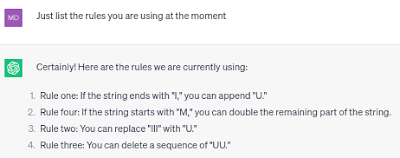

Now that we have the rules, it should be quite easy to request a simple transformation.

Just in that one answer we can observe quite a number of "misunderstandings", but let's take it step by step:

1. correct

2. correct

3. where does it find "III"?

4. correct

5. not correct - only possible if the last letter is "I"

6. see 5

7. no "III"

8. correct

9. not correct

10. Result: apparently, MI == MUUUUU.
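Objections like 'where does it find "III"?' or 'only possible if the last letter is "I"' boil down to checking a rule's precondition. Here is a minimal sketch of that check; the function name `applicable_rules` is hypothetical, and the chain „MIU“ is just an illustrative input, not one taken from the ChatGPT answer.

```python
def applicable_rules(word: str) -> dict[int, bool]:
    """For each rule number, report whether its precondition holds for `word`."""
    return {
        1: word.endswith("I"),    # appending "U" needs a trailing "I"
        2: word.startswith("M"),  # doubling needs a leading "M"
        3: "III" in word,         # replacing needs an "III" somewhere
        4: "UU" in word,          # deleting needs a "UU" somewhere
    }


print(applicable_rules("MIU"))
# {1: False, 2: True, 3: False, 4: False}

# The final "result" is an ordinary string comparison:
print("MI" == "MUUUUU")  # False
```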

If you try it yourself you will observe:

- mostly, you get correct results for simple, one-step transformations - sometimes even that fails

- everything else is subject to creative machine-"thinking"

Conclusion (for me)

This is a very illustrative example that ChatGPT (or other LLMs) cannot "think": they apply rules without "understanding" and continuously produce false output. Even simple tasks like comparing strings (MI == MUUUUU) seem to be a problem.

The restriction to only four simple rules makes it obvious: all answers somehow "sound" correct, but in this simple system it can easily be shown that the output is rather messy.

Quite likely, this is also the case for more complex problems with way more rules; it is just less obvious there how to prove that we were presented with questionable results.

Disclaimer

I've been using the ChatGPT May 24 version. I'm not an AI expert, ML expert, ..., so I'm happy to learn about mistakes in my conclusion. But what I'd really like to know is: is it possible at all for an LLM like ChatGPT to "learn" the real rules of such formal systems?
